Participating in a Shared Task¶

Note

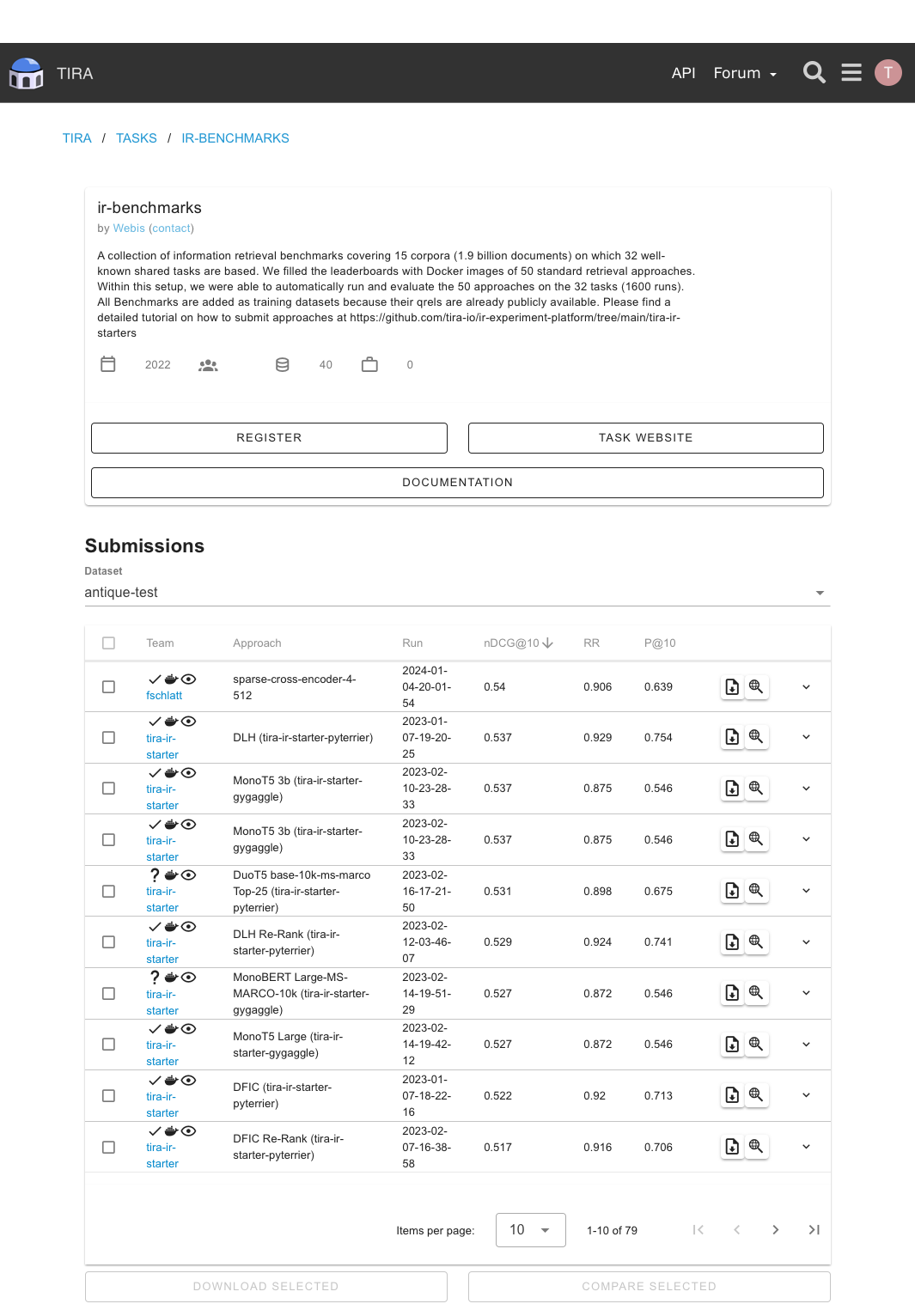

This tutorial assumes that you have already created a user account. For the sake of the tutorial, we will create submissions for the ir-benchmarks task, but the process works the same for any other task.

Joining a Task and preparing your Environment¶

To join a task, visit tira.io/tasks, search for the task you would like to submit to, and click its name to find the task’s page.

You now see either a button titled SUBMIT, if you have already registered a group for this task, or a button titled REGISTER. In the latter case, click on REGISTER, fill out the form that pops up, and confirm the registration by pressing Submit Registration. After a reload, you should see a SUBMIT button.

The task view for ir-benchmarks. The REGISTER button could read SUBMIT, if you are part of a group already.¶

Click on SUBMIT. TIRA offers three choices for submission: code submissions, Docker submissions, and run uploads (click on the respective tab to find out more).

Requirements to your Software¶

TIRA aims to enable double-blind evaluation of ideas and approaches in diverse domains. Therefore, we impose only minimal requirements on the software that implements your ideas and approaches. Your software must be POSIX compatible, be shipped in a Docker image, read its inputs from a dynamic input directory (via the $inputDataset variable), and write its results to a dynamic output directory (via the $outputDir variable).

On a high level, your software is compatible if it can be invoked like this:

your-software --input-directory $inputDataset --output-directory $outputDir

Note

TIRA also makes environment variables available, so you do not need to pass the input and output directories via the command line. Your software has access to the following environment variables:

inputDataset: Points to the POSIX path containing the input dataset. The format and structure of the contents of this directory depend on your task.

outputDir: Points to the POSIX path where your software should store its predictions. The expected format and structure of the predictions depend on your task.
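A minimal sketch of how a Python entry point might resolve both directories, assuming it prefers the command-line flags shown above and falls back to the inputDataset and outputDir environment variables. The function name resolve_directories is hypothetical and not part of TIRA.

```python
import argparse
import os
from pathlib import Path


def resolve_directories(argv=None, env=None):
    """Return (input_dir, output_dir) from CLI flags, falling back to the environment."""
    env = os.environ if env is None else env
    parser = argparse.ArgumentParser()
    # The environment variables set by TIRA serve as defaults for the flags.
    parser.add_argument("--input-directory", default=env.get("inputDataset"))
    parser.add_argument("--output-directory", default=env.get("outputDir"))
    args = parser.parse_args(argv)
    if not args.input_directory or not args.output_directory:
        parser.error("input and output directory must be set via flags or environment")
    return Path(args.input_directory), Path(args.output_directory)
```

Calling resolve_directories() without arguments reads the real command line and environment. What the software reads from the input directory and writes to the output directory depends on your task.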

Prepare your Submission¶

Code submissions are the recommended form of submitting to TIRA. They are compatible with CI/CD systems like GitHub Actions and build a Docker image from a git repository while collecting important experimental metadata to improve transparency and reproducibility.

The requirements for code submissions are:

Your approach is in a git repository.

Your git repository is complete, i.e., contains all code and a Dockerfile to bundle the code.

Your git repository is clean, e.g., git status reports “nothing to commit, working tree clean”.
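The requirements above can be checked locally before submitting. The following is a rough sketch, not the check that TIRA itself performs. It assumes the Dockerfile sits in the repository root (the requirements only say the repository must contain one), and the function names are hypothetical.

```python
import subprocess
from pathlib import Path


def is_clean(porcelain_output: str) -> bool:
    """A repository is clean when `git status --porcelain` prints nothing."""
    return porcelain_output.strip() == ""


def check_repository(repo: Path) -> list[str]:
    """Return a list of problems; an empty list means all checks passed."""
    problems = []
    # Assumption: the Dockerfile is located in the repository root.
    if not (repo / "Dockerfile").is_file():
        problems.append("no Dockerfile in the repository root")
    status = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo, capture_output=True, text=True,
    )
    if status.returncode != 0:
        problems.append("not a git repository")
    elif not is_clean(status.stdout):
        problems.append("uncommitted or untracked changes")
    return problems
```

For example, check_repository(Path(".")) returns an empty list when the current directory is a clean git repository with a Dockerfile in its root.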

When those requirements are fulfilled, code submissions perform the following steps:

Build the Docker image from the git repository while tracking important experimental metadata (e.g., about the git state and the code).

Run the Docker image on a small spot check dataset to ensure it produces valid outputs.

Upload the Docker image together with the metadata to TIRA.
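To catch obvious problems before the spot check, you could run a similar check yourself. The sketch below is only a local approximation under stated assumptions: it runs a command directly on the host rather than inside the Docker image, and it only verifies that the command succeeds and writes something to the output directory, not that the output is valid for your task. The function name spot_check is hypothetical.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path


def spot_check(command, input_dir):
    """Run `command` with inputDataset/outputDir set; return True if it produced output."""
    with tempfile.TemporaryDirectory() as output_dir:
        # Pass the directories through the environment variables that TIRA uses.
        env = dict(os.environ, inputDataset=str(input_dir), outputDir=output_dir)
        completed = subprocess.run(command, env=env)
        produced = sorted(p.name for p in Path(output_dir).iterdir())
    if completed.returncode != 0:
        print(f"software exited with code {completed.returncode}", file=sys.stderr)
        return False
    if not produced:
        print("software wrote nothing to the output directory", file=sys.stderr)
        return False
    return True
```

Here command is an argument list such as ["python3", "my_approach.py"], where my_approach.py stands in for your own entry point.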

The upload submission is the simplest form of submitting: you run your approach yourself and upload the resulting runfile. It has two notable drawbacks, which is why we discourage using it:

Participants need access to the dataset. This may not be possible (e.g., for legal reasons) or desirable (e.g., to prevent future models from profiting from the author’s analysis of the dataset).

The result is not verifiable: the organizer cannot ensure that your model actually produced the runfile.

Hint

If you are looking for the most convenient type of submission, we recommend a code submission, as it works with GitHub Actions or other CI/CD automations.

Submitting your Submission¶

At this point, you have come up with a brilliant idea and would like to submit it to TIRA for evaluation and to take pride in your leaderboard position.

Optional: Uploading Artifacts (e.g., Hugging Face models required by your code)

Submitting

Please install the TIRA client via

pip3 install tira

Please authenticate your TIRA client using your API key. You get your API key after registration on TIRA on your submit page.

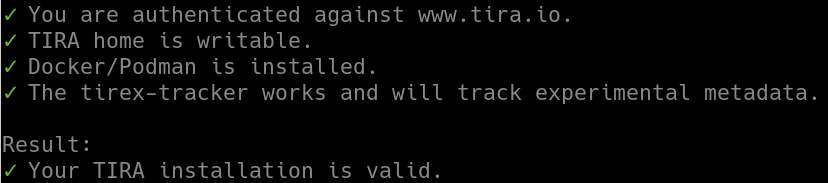

Ensure that your TIRA installation is valid by running

tira-cli verify-installation

A valid output should look like:

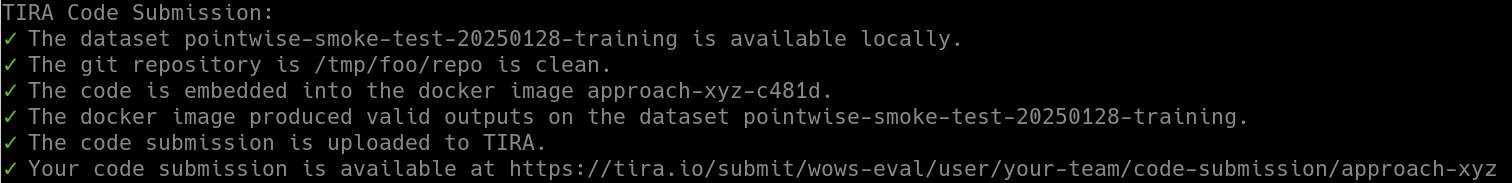

Now you are ready to upload your code submission to TIRA. Assuming that you want to upload the code in a directory approach-xyz to the task wows-eval, the command

tira-cli code-submission --path some-directory/ --task wows-eval

would perform the code submission. A valid output should look like:

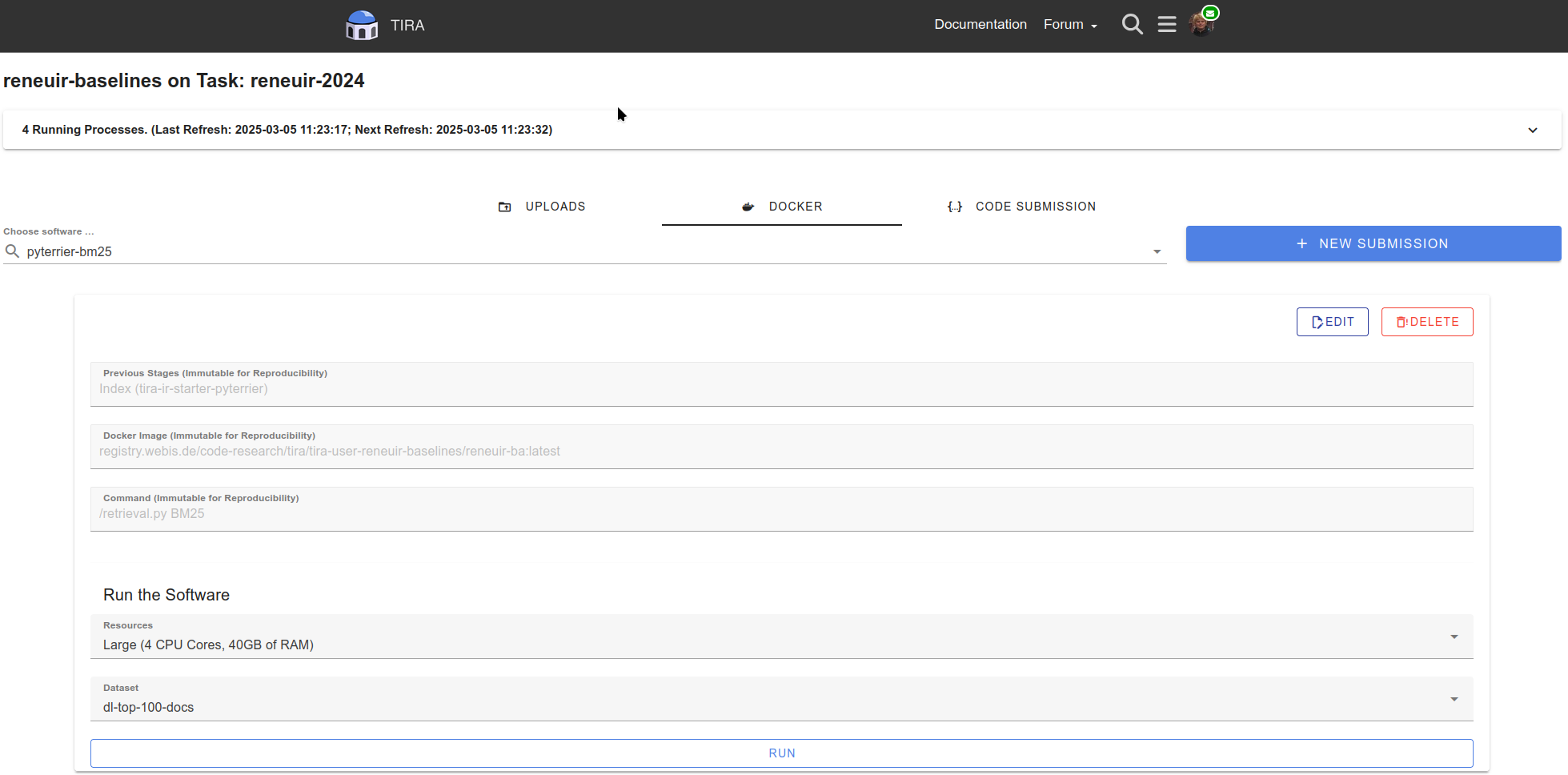

Execute Your Submission¶

Now that you have uploaded your code or Docker submission, you can execute it within TIRA (this is not needed for run uploads). Navigate to your task page and select your submission. Then, select the resources and the dataset on which your submission should run, and click “RUN”.

After your software has been executed, you can directly see the outputs and evaluation scores for public training datasets. For private or test datasets, the organizers will manually review the output of your system and will contact you in case there are errors.